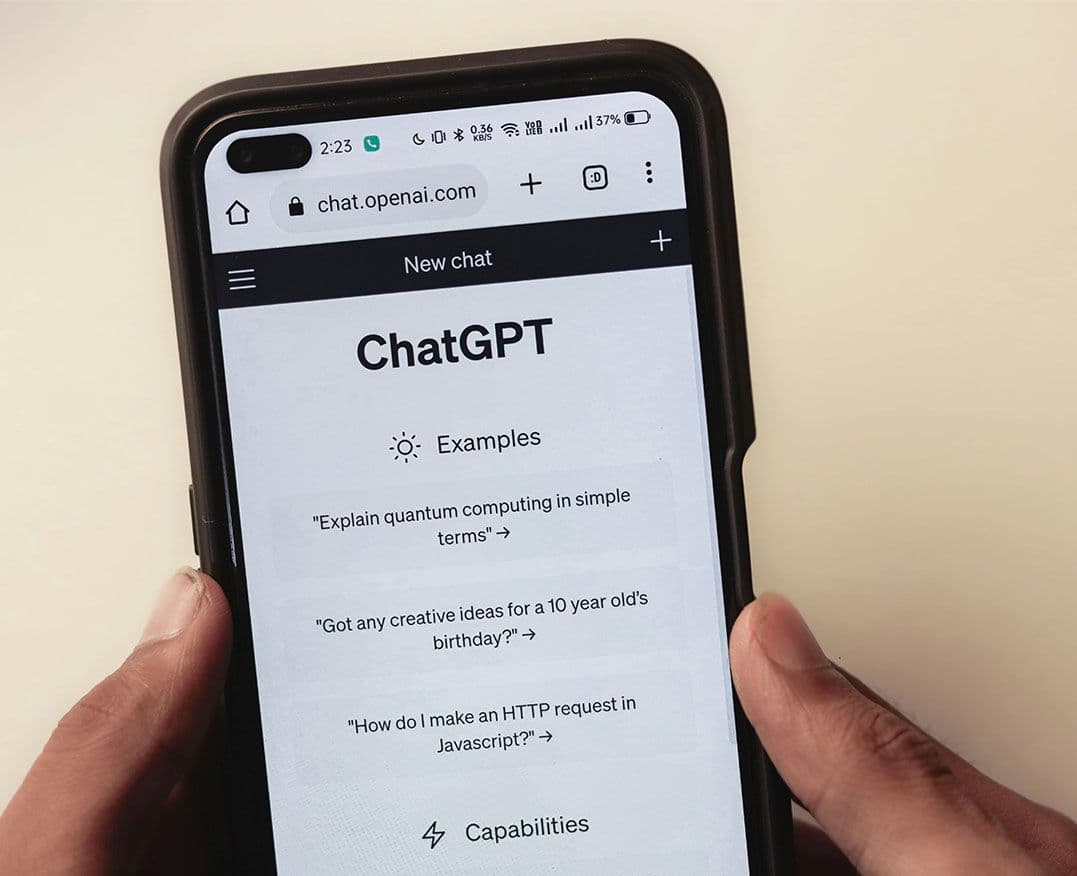

Protect Your Privacy: What Not to Share with ChatGPT

Many people now rely on ChatGPT for a variety of tasks, from drafting business emails to seeking relationship advice. While this artificial intelligence tool can significantly enhance productivity, experts warn against sharing personal information because of the privacy risks. Once you enter data into the chatbot, you essentially lose control of that information, according to Jennifer King, a researcher at the Stanford Institute for Human-Centered Artificial Intelligence. Companies such as OpenAI and Google also advise users not to enter sensitive data, as reported by the New York Post.

Essential Personal Information to Keep Private

It is crucial never to disclose information that could directly identify you. This includes personal identification numbers, driver’s license details, passport information, and even your date of birth, home address, and phone numbers. While some chatbots may attempt to mask such data, the safest practice is to avoid entering it altogether. A representative from OpenAI emphasized, “We want our AI models to learn about the world, not about private individuals, and actively minimize the collection of personal data.”

Sensitive Medical and Financial Data

Medical institutions are bound by strict confidentiality regulations; AI chatbots are not held to the same standards. If you intend to use ChatGPT to interpret medical results, experts recommend editing the document first to remove all personal information, leaving only the test results.
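For readers comfortable with a little scripting, the editing step described above can be sketched in Python. This is only an illustration under stated assumptions: the field labels, the `strip_identifiers` function, and the sample report are hypothetical, and a real report may label or lay out its fields differently, so the output should always be checked by eye before anything is pasted into a chatbot.

```python
import re

# Assumed field labels for illustration; a real report may use other wording.
IDENTIFYING_LABELS = ("name", "date of birth", "dob", "address", "phone", "patient id")

# Matches a line that begins with one of the labels followed by a colon.
LABEL_RE = re.compile(
    r"^\s*(?:" + "|".join(re.escape(label) for label in IDENTIFYING_LABELS) + r")\s*:",
    re.IGNORECASE,
)


def strip_identifiers(report: str) -> str:
    """Return the report without lines that start with an identifying label."""
    kept = [line for line in report.splitlines() if not LABEL_RE.match(line)]
    return "\n".join(kept)


# Made-up sample data, not taken from any real document.
sample = """Name: Jane Doe
Date of birth: 01/02/1980
Phone: 555-0100
Hemoglobin: 13.5 g/dL
Glucose: 92 mg/dL"""

print(strip_identifiers(sample))
```

The sketch drops whole lines rather than trying to recognize names or numbers inside free text, which keeps it simple but means identifying details that appear elsewhere in the document would be missed.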

Moreover, sharing your banking and investment account numbers in a conversation with AI can lead to severe consequences if a security breach occurs. Such information could potentially be exploited to monitor your finances or gain unauthorized access to your funds.

Similarly, while it may seem convenient to provide your login credentials to a chatbot for task completion, this practice poses significant risks. AI tools are not secure repositories for sensitive information. For password management, specialized tools like password managers are recommended.

Risks in Business Communications

Using publicly available AI tools for business purposes, such as drafting emails or editing documents, carries the risk of inadvertently revealing sensitive client data or internal business secrets. As a result, many companies have opted for dedicated business versions of AI platforms or have developed their own systems equipped with enhanced security measures.

Enhancing Privacy Protection

For those who still wish to use AI chatbots despite the risks, several steps can help safeguard privacy. Securing your user account with a strong password and enabling multi-factor authentication can significantly improve your security. Additionally, some tools, including ChatGPT, offer a “temporary chat” mode, in which conversations are not saved to your chat history.

In summary, while ChatGPT can be an invaluable resource for various tasks, maintaining privacy by avoiding the sharing of personal, medical, financial, and sensitive business information is paramount. Following these guidelines can help users enjoy the benefits of AI while protecting their own data.
