
Edinburgh Researchers Unveil System to Boost AI Processing Speed

Researchers at the University of Edinburgh have developed a system that could significantly increase the speed at which artificial intelligence (AI) processes data. The software may allow AI to draw conclusions from data up to ten times faster, which could benefit industries that rely on large language models (LLMs), including finance, healthcare, and customer service.

Advancements in Wafer-Scale Computing

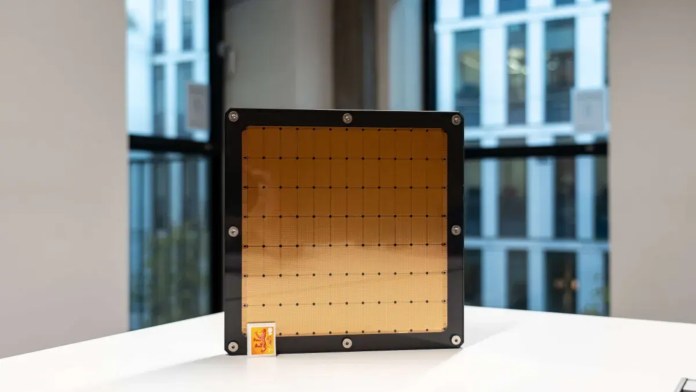

The work centers on wafer-scale computer chips, the largest chips available, each approximately the size of a medium chopping board. Although the potential of these chips has been recognized, current AI systems run primarily on networks of graphics processing units (GPUs). In those systems, information must traverse multiple interconnected chips, which introduces delays.

In contrast, wafer-scale chips can perform numerous tasks and calculations simultaneously due to their size and extensive on-chip memory. This allows data to move more efficiently within the chip itself, reducing delays that occur when information must travel between smaller chips.

To address the software limitations that have kept wafer-scale chips out of AI applications, researchers at Edinburgh created a specialized software system called WaferLLM. Tests conducted at EPCC, a supercomputing center at the university, showed that third-generation wafer-scale processors delivered a tenfold increase in response speed compared with a conventional cluster of 16 GPUs.

Energy Efficiency and Future Implications

In addition to speed improvements, the research indicates that wafer-scale chips are significantly more energy efficient: they consume approximately half the energy of traditional GPUs when running LLMs. This aligns with the growing need for sustainable computing.

“Wafer-scale computing has shown remarkable potential, but software has been the key barrier to putting it to work,” said Dr. Luo Mai, the lead researcher and a reader at the university’s School of Informatics. “With WaferLLM, we show that the right software design can unlock that potential, delivering real gains in speed and energy efficiency for large language models.”

The peer-reviewed findings were presented at the USENIX Symposium on Operating Systems Design and Implementation (OSDI) in July 2025. Professor Mark Parsons, director of EPCC and dean of research computing at the university, praised Dr. Mai’s work as “truly ground-breaking,” emphasizing its potential to drastically reduce the cost of inference in AI applications.

The research team has released the software as open source, allowing other developers and researchers to build their own applications on wafer-scale technology.

The advancements made by the University of Edinburgh’s research team could pave the way for a new generation of AI capabilities, enabling real-time intelligence across various fields such as science, healthcare, and education.
